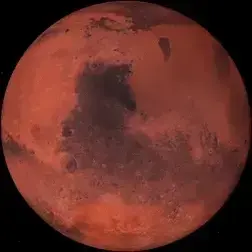

You’re correct in that it’s not as sharp, but it still poses problems with getting into seals and lungs and sticking to everything. Plus it’s very toxic, probably the bigger concern for living there.

Rhaedas

Profile pic is from Jason Box, depicting a projection of Arctic warming to the year 2100 based on current trends.

- 0 Posts

- 339 Comments

“I hate moon dust. It’s coarse and rough and irritating and it gets everywhere.”

“I hate Mars dust too.”

It’s actually a huge problem to solve before any rational long term settlement occurs in these places. The stuff is pretty bad.

41·24 days ago

LLMs can be good at openings. Not because they are thinking through the rules or planning strategies, but because opening moves are likely in most general training data from various sources. The model is copying the most probable reaction to your move, based on lots of documentation. This can of course break down when you stray from a typical play style, since it has fewer probable options to choose from, and only a few moves in there won’t be any left, given the huge number of possible moves.

I.e., there are no calculations involved. When you play an LLM at chess, you’re playing a list of common moves in history.

An even simpler example would be to tell the LLM that its last move was illegal. Even knowing the rules you just told it, it will agree and take it back. This comes from being trained to give satisfying replies to a human prompt.

4·25 days ago

I’ve heard the only way to win is to lock down your shelter and strike first.

42·25 days ago

It can be bad at the very thing it’s designed to do. It can repeat phrases often, something that isn’t great for writing. But why wouldn’t it? It’s all about probability, so commonly said things will pop up more unless you adjust the variables that determine the randomness.
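
To make the "variables that determine the randomness" a bit more concrete, here is a minimal sketch of temperature sampling, the usual knob for this. The token list and scores are made up for illustration, and the function names are hypothetical; this is not any particular model’s or library’s API.

```python
import math
import random

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities.

    Lower temperature sharpens the distribution (the common choice wins
    even more often); higher temperature flattens it.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature=1.0, rng=random):
    """Pick one token at random, weighted by its temperature-scaled probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical next-word candidates and scores, purely for illustration.
tokens = ["the", "a", "moon", "dust"]
logits = [3.0, 2.0, 0.5, 0.1]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a low temperature nearly all of the probability lands on the most common candidate, which is where the repetition comes from. Raising it spreads the probability out, at the cost of more odd picks.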

3·25 days ago

There are some very odd pieces on high-dollar physical chess sets too.

471·1 month ago

And unmonitored? Don’t trust anything from Google anymore.

What makes this better than Ollama?

70·1 month ago

This is exactly what a President, an elected service worker sworn to protect the rights of the public, should be doing.

Not.

Those would be fractured kyber crystals. Not something the Jedi would want.

Search your feelings, you know it to be true. Also, look at the inventory spreadsheets from last year, you can see what went into construction.

155·1 month ago

Dwarf or not, Pluto is STILL a planet.

But he wasn’t. At least in the movie version, he and Banner had failed a few times, maybe more we didn’t see on screen. Something happened when Tony wasn’t there that sparked Ultron to become aware and catch Jarvis off guard. I’d give him credit for getting it 99% of the way there, same with Vision, but he didn’t make that final jump, it happened on its own.

And Jarvis wasn’t AGI. Seems like it to us, but since Ultron was apparently the big moment of A(G)I in the MCU even with Jarvis being around all that time, he was just a very flexible and even self-aware script that would never do something of his own accord, only following Tony’s orders. I think even Ultron catches on to that in the brilliant few seconds of waking and realization with his “why do you call him Sir?”

2·2 months ago

LOTS of radiators.

“Wow, cool. How many additional HPs did I get?”

adding up calorie intake

“Two.”

19·2 months ago

The rest of social media did to 4chan what reality did to The Onion. Both still exist, but only a pale version of before because the new versions are so much worse.

4·2 months ago

I think the consistency can be resolved by proposing that how damaging a jump into hyperspace is depends on the point at which it is intercepted. Holdo was an expert in combat and so knew the distance needed to get the greatest impact. And maybe she didn’t even realize how big it would be; after all, it was a desperate move to try and buy time, and not a used tactic because of the cost vs. payoff. When the Executor drops in right in the middle of things there isn’t enough distance to get to a full “detonation” effect, plus do we fully see if there is no damage at all?

Basically just like some explosives, you can have a full effect or a fizzle that does minor damage, depending on how it is set off. Holdo was (probably) planning or hoping for maximum effect, while the rebels at Scarif were just trying to get away and had no clue there’d be something in the way.

Current LLMs would end that sketch soon, agreeing with everything the client wants. Granted, they wouldn’t be able to produce, but as far as the expert narrowing down the issues of the request, ChatGPT would be all excited about making it happen.

The hardest thing to do with an LLM is to get it to disagree with you. Even with a system prompt. The training deep down to make the user happy with the results is too embedded to undo.

This is when the AI, in a microsecond, decided to destroy the human race.

I was actually expecting the end of the story to have the wasp at some point sting you just because of existing.

Not all wasps are like that, the smaller mud daubers and such are rather bee-like in their apathy of you being around them. But hornets. Yeah, they’re evil.

It’s what the people want. There’s been several times where high speed rail in Florida was put on a public ballot, and overwhelmingly got voted for. And then the government came back and said, “wha…we didn’t think you’d want this? We don’t have the money.” The last I was involved in explored high speed from Miami through Orlando and the I-4 corridor to Tampa. Huge potential. “We’re a poor state, can’t do it.” FU FL